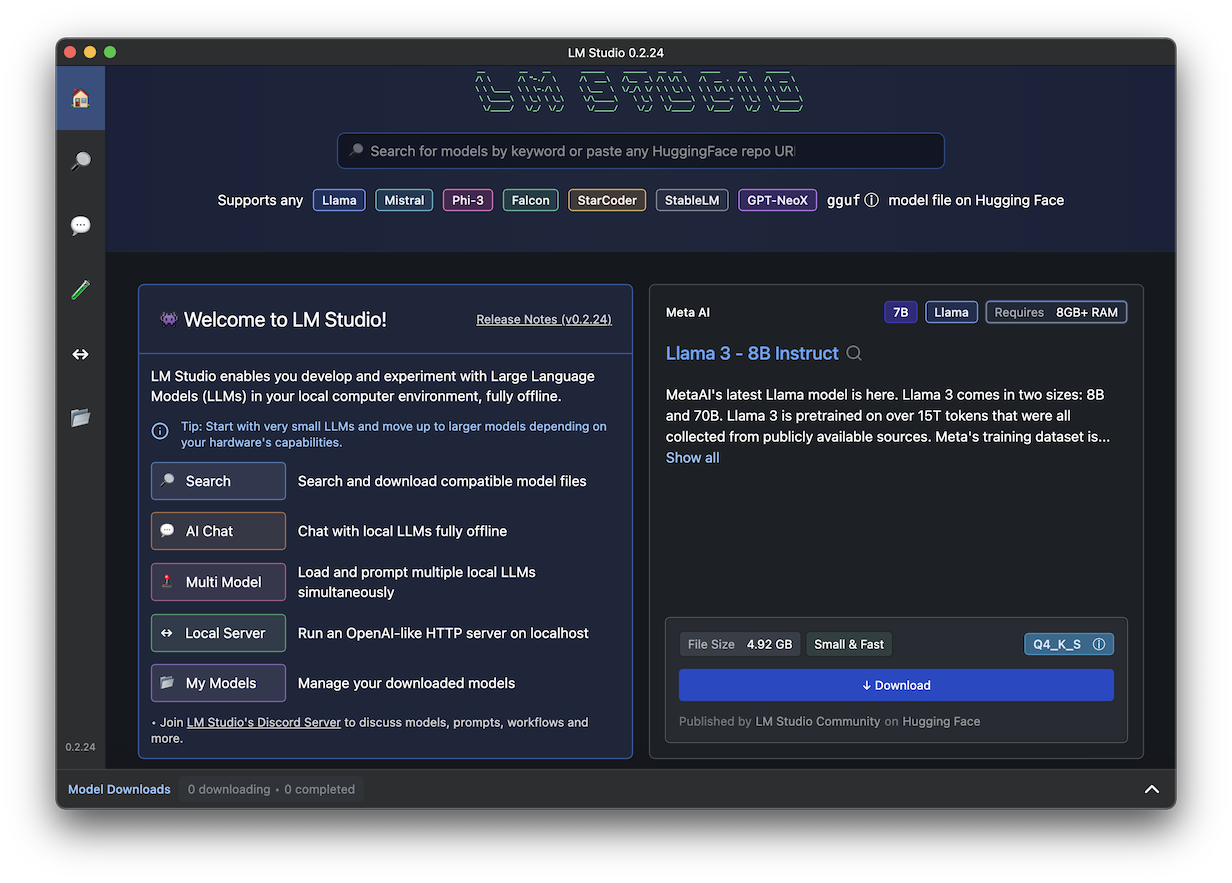

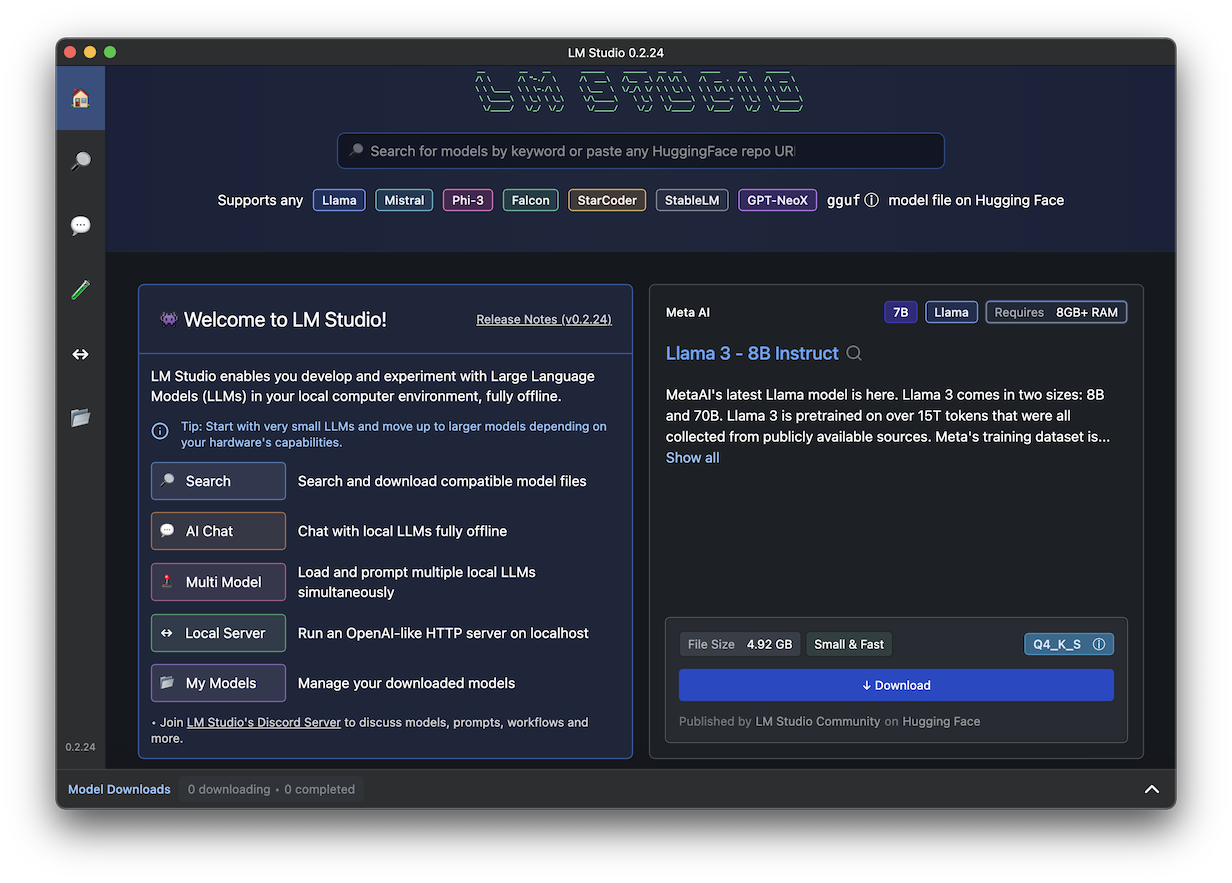

In a browser, open:

https://lmstudio.ai/

Download the version for your OS and install it.

On the right side, in the "Llama 3 - 8B Instruct" section, click the Download button, as shown below.

This model was trained on data up to March 2023.

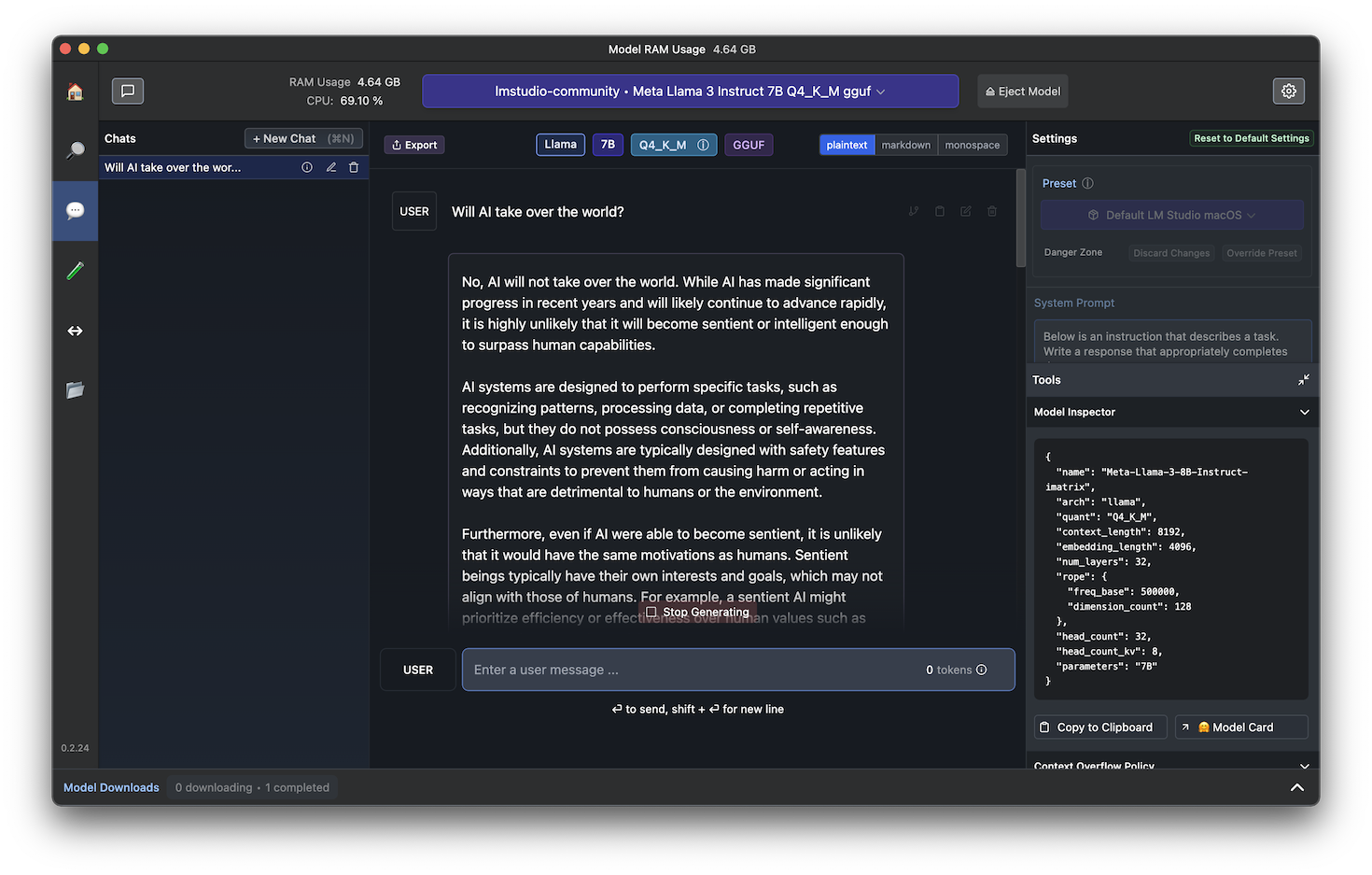

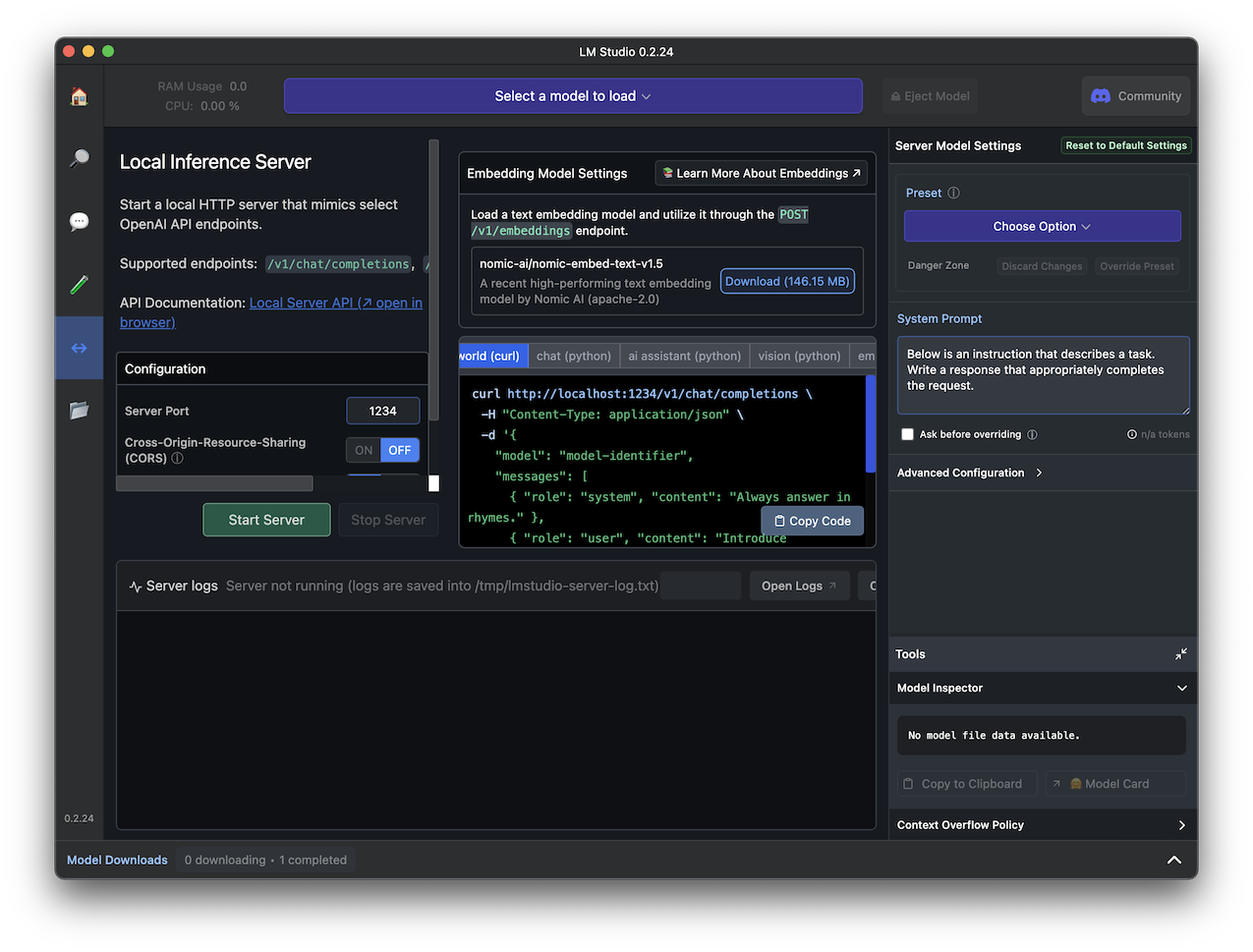

At the top center, click the "Select a model to load" button.

Select the model you just downloaded.

Enter a question in the USER box at the lower center, and an answer appears at the top, as shown below.

If the text starts repeating endlessly, which it did when I tried it, and a "Stop generating" box pops up, click it.

If you wanted to add documents to the prompt, you'd have to paste the text into the chat window along with your prompt.
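The manual approach described above, pasting document text in along with the question, can be sketched in a few lines of Python. This is only an illustration: the function name, the delimiter lines, and the sample text are hypothetical and are not part of LM Studio.

```python
def build_prompt_with_document(question: str, document_text: str) -> str:
    """Combine pasted document text and a question into one prompt string."""
    return (
        "Use the following document to answer the question.\n\n"
        "--- DOCUMENT START ---\n"
        f"{document_text.strip()}\n"
        "--- DOCUMENT END ---\n\n"
        f"Question: {question.strip()}"
    )


# Hypothetical sample data, used only to show the resulting prompt layout.
prompt = build_prompt_with_document(
    "What does the note say about the meeting?",
    "The meeting was moved to Thursday at 10 am.",
)
print(prompt)
```

The printed string is what you would paste into the USER box. Tools like AnythingLLM, used later in this project, automate this step.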

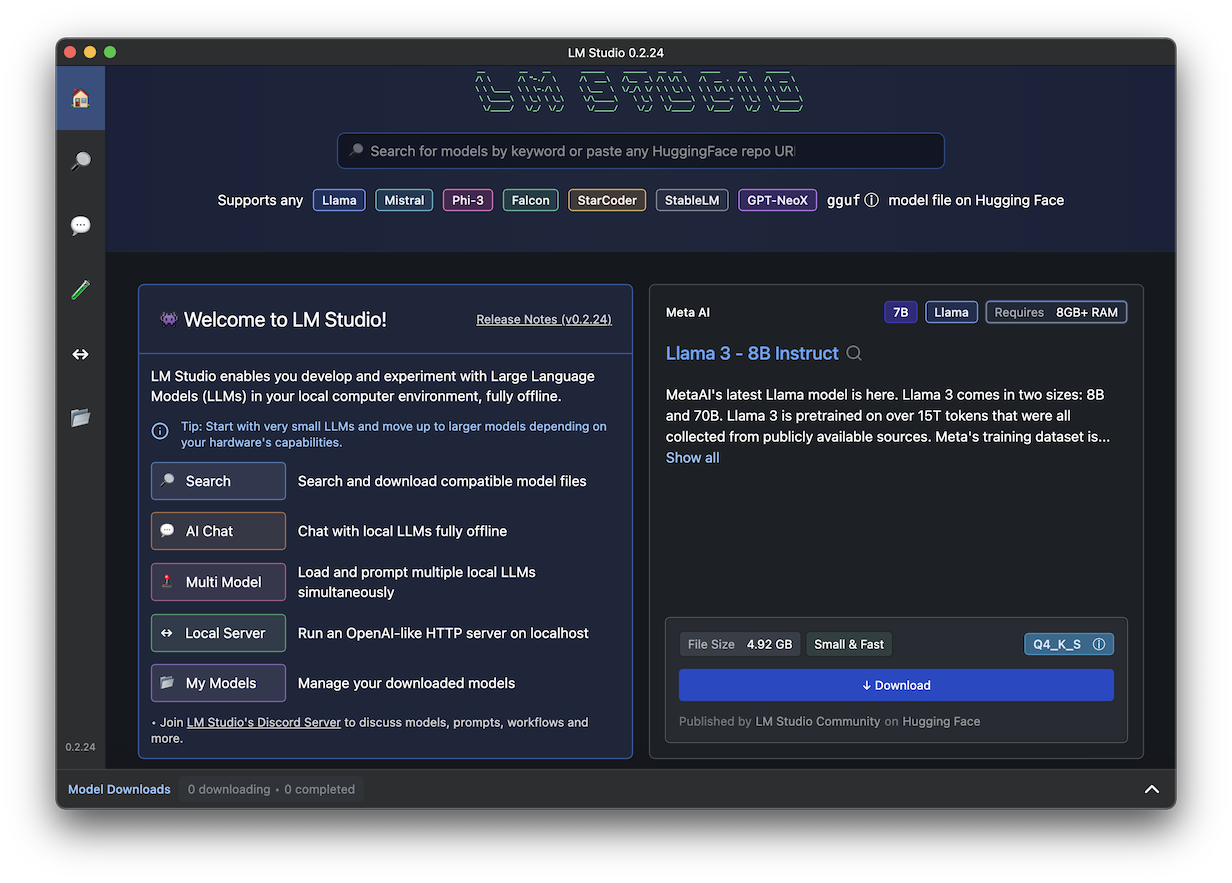

In the lower left, in the "Welcome to LM Studio!" pane, click the "Local Server" button, as shown below.

/v1/chat/completions

The server will listen on local port 1234.

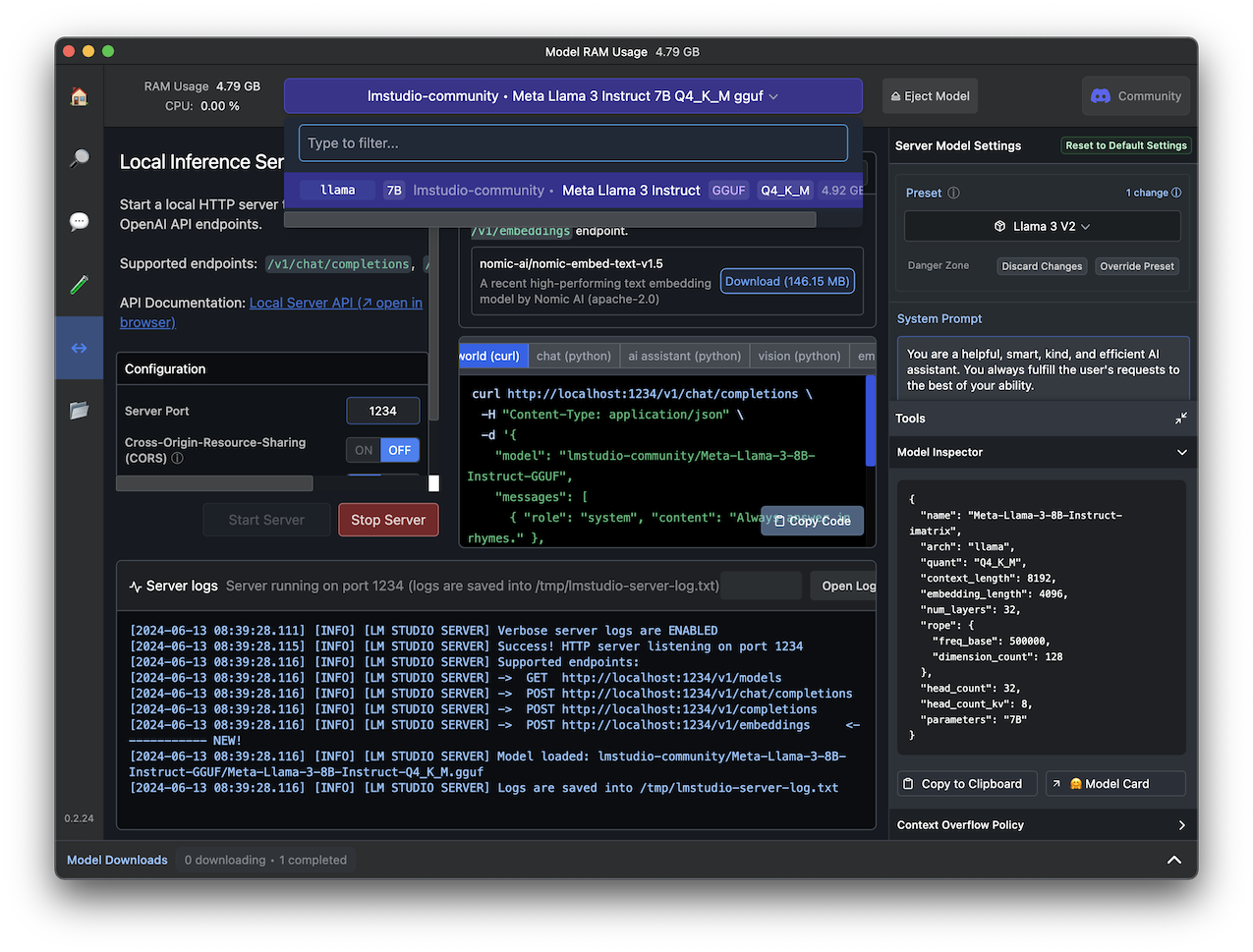

A box pops up saying "Select a model to load".

Click the model you downloaded previously, "Meta Llama 3 instruct".

When the model loads, the server starts, and the lower left pane starts showing log entries, as shown below.
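Once the server is running, other programs can send it prompts over HTTP. The sketch below uses only the Python standard library and assumes the server is on its default port, 1234, and accepts OpenAI-style chat requests. The model name "local-model" and the temperature value are placeholders chosen for illustration, not values taken from this project.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is listening on port 1234.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(question: str, model: str = "local-model") -> urllib.request.Request:
    """Build (but do not send) a POST request for the chat completions endpoint."""
    payload = {
        "model": model,  # placeholder name; adjust if your server expects a specific one
        "messages": [{"role": "user", "content": question}],
        "temperature": 0.7,  # illustrative value
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_llm(question: str) -> str:
    """Send the question to the local server and return the reply text."""
    request = build_chat_request(question)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, calling `ask_local_llm("Hello")` should return the model's reply, and the request should appear among the log entries in LM Studio.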

In a browser, open:

https://useanything.com/

Click the "Download AnythingLLM for Desktop" button.

Download the version for your OS and install it.

At the Welcome screen, click the "Get started" button.

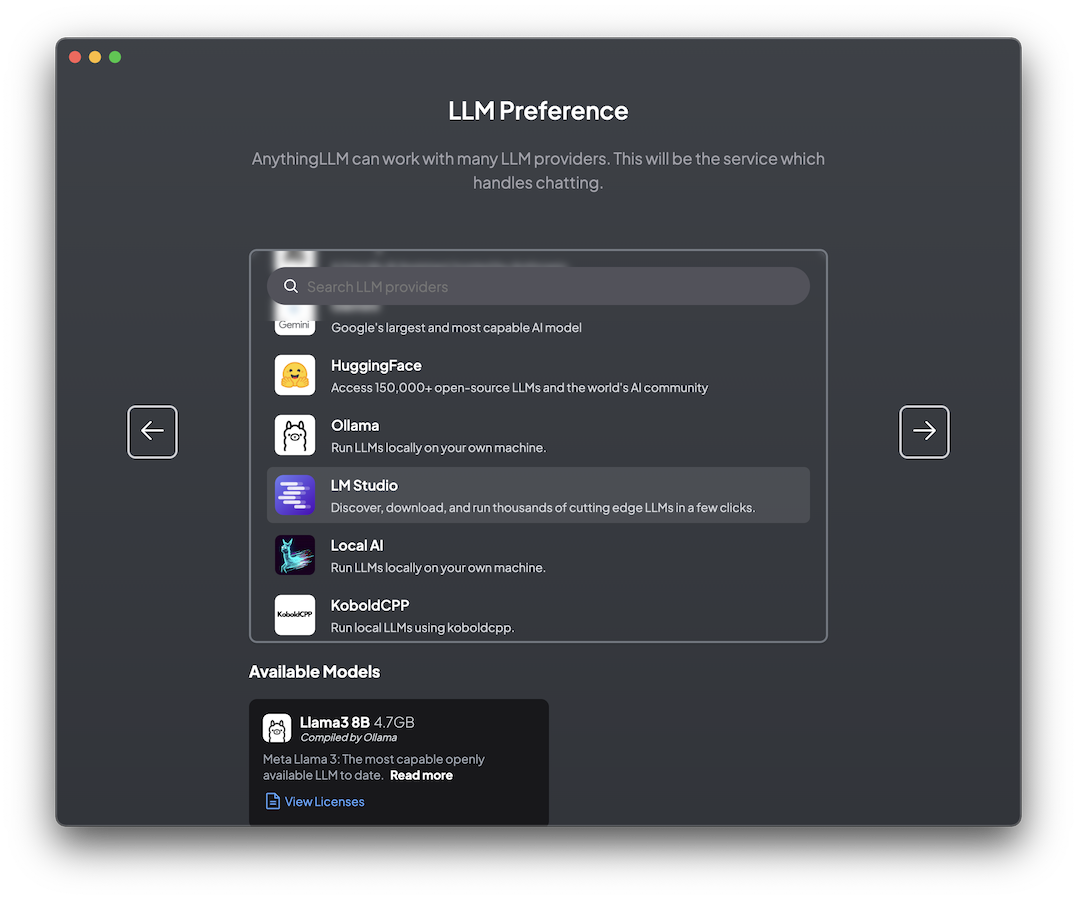

On the LLM Preference screen, scroll down and click "LM Studio".

At the bottom left, enter a "LMStudio BaseURL" of:

http://localhost:1234/v1

At the bottom right, enter a "Token context window" of 4096, as shown below.
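The 4096-token context window limits how much text (prompt, any added document excerpts, and the reply) the model can handle at once. The sketch below gives a rough feel for that budget. The figure of four characters per token and the 512 tokens reserved for the answer are assumptions for illustration only; a real tokenizer will give different counts.

```python
CONTEXT_WINDOW_TOKENS = 4096  # the value entered in AnythingLLM
CHARS_PER_TOKEN = 4           # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; a real tokenizer will give different counts."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def fits_in_context(prompt: str, reserved_for_answer: int = 512) -> bool:
    """Check whether the prompt leaves room for the reply within the window."""
    return estimate_tokens(prompt) + reserved_for_answer <= CONTEXT_WINDOW_TOKENS


print(fits_in_context("What is the Microsoft recall feature?"))
```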

On the "Embedding Preference" page, click the right-arrow.

On the "Vector Database Connection" page, click the right-arrow.

On the "Data Handling & Privacy" page, click the right-arrow.

On the "Welcome to AnythingLLM" page, click "Skip Survey".

On the "Create your first workspace" page, enter a name of ML128 and press Enter.

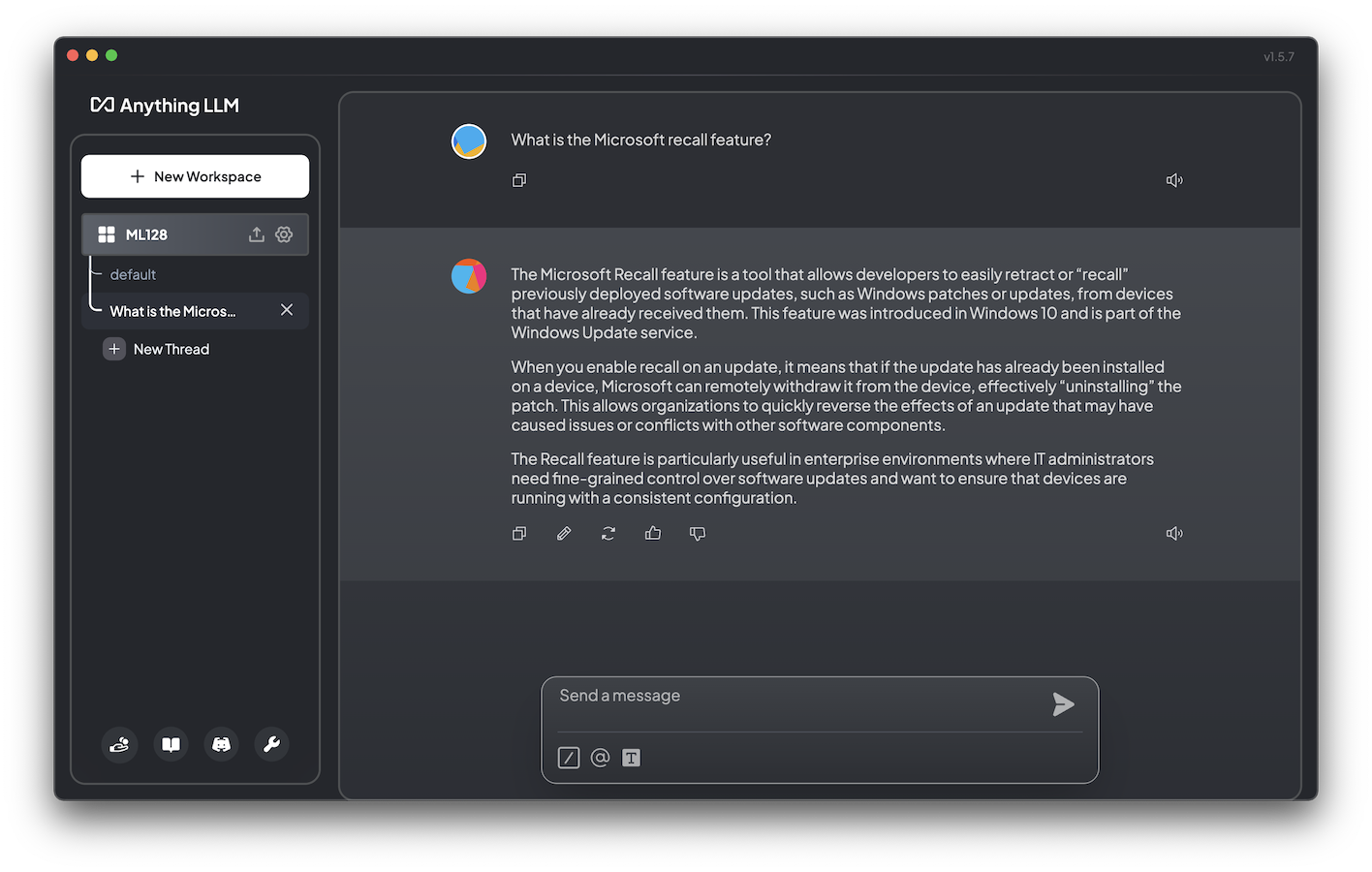

Enter this prompt:

What is the Microsoft recall feature?

Click the right-arrow to the right of the message to process the prompt.

The answer is wrong, as shown below, because Microsoft Recall was announced on May 20, 2024, but Llama 3 was trained only on data up to March 2023.

The LLM guesses from the name, making up a feature that does not exist.

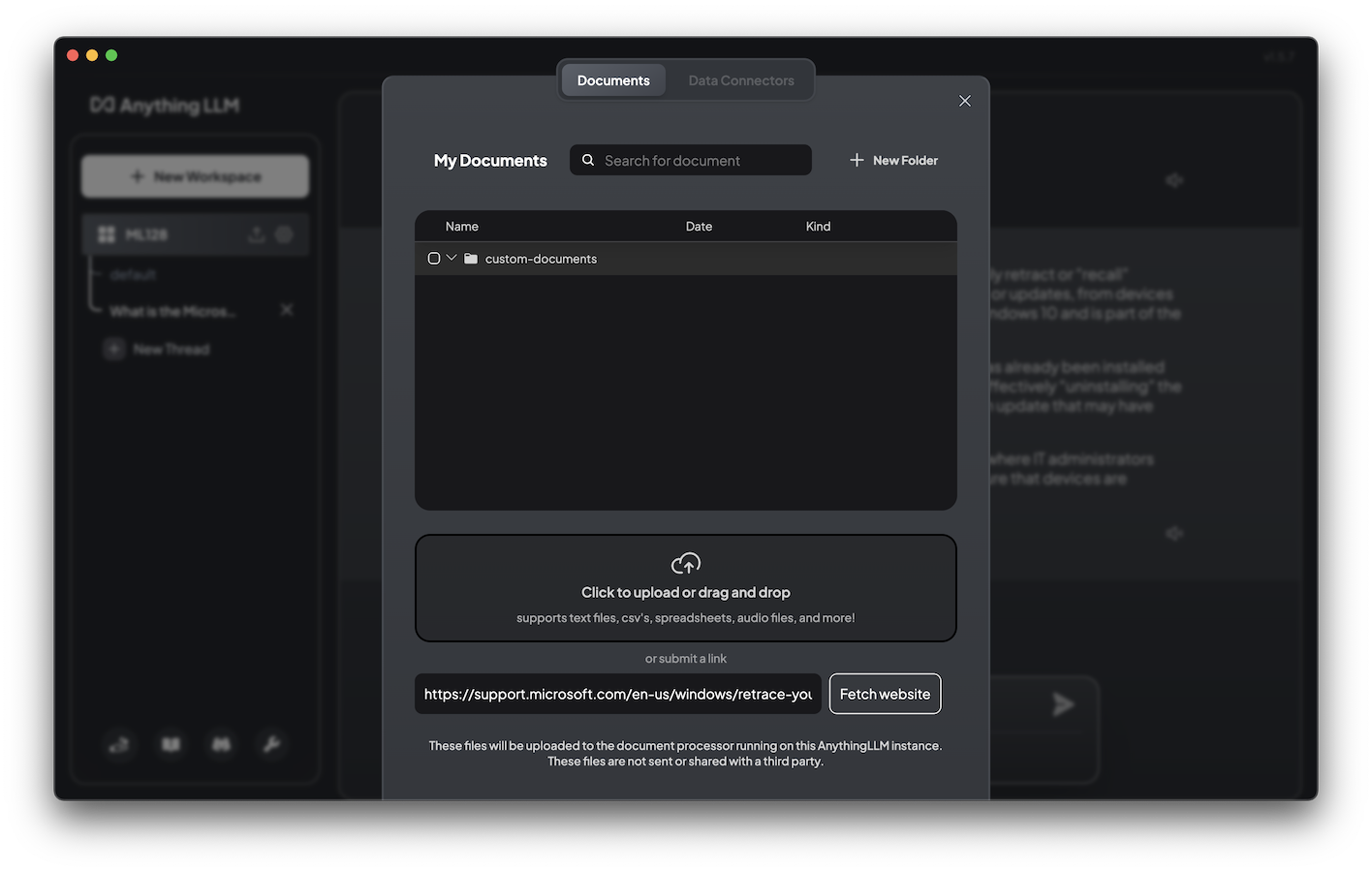

At the bottom, in the "or submit a link" box, enter this URL, as shown below.

https://support.microsoft.com/en-us/windows/retrace-your-steps-with-recall-aa03f8a0-a78b-4b3e-b0a1-2eb8ac48701c

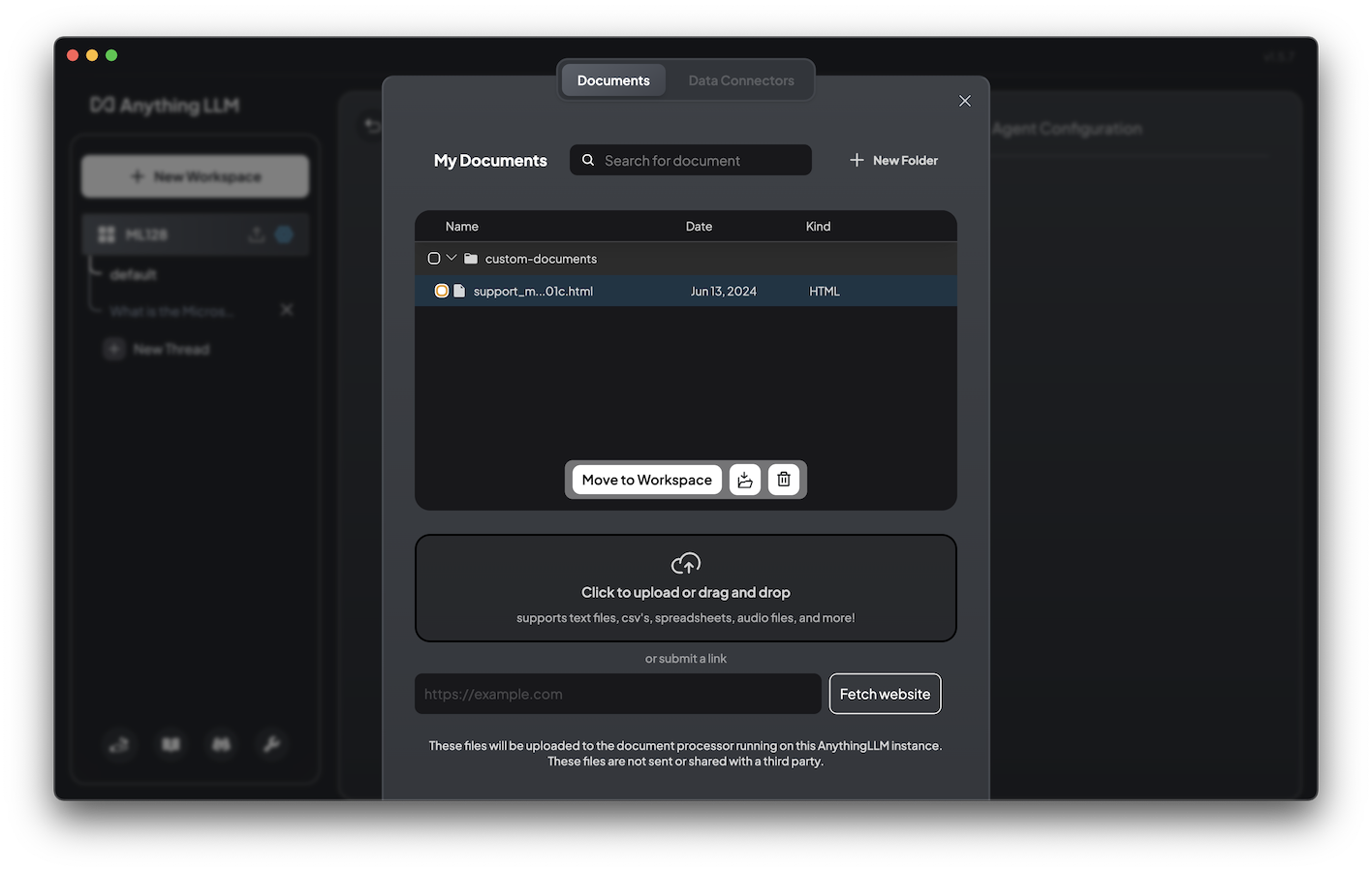

When the link has been uploaded, the document appears in the center pane.

Click the check box to the left of the website name.

In the lower center, click the "Move to Workspace" button, as shown below.

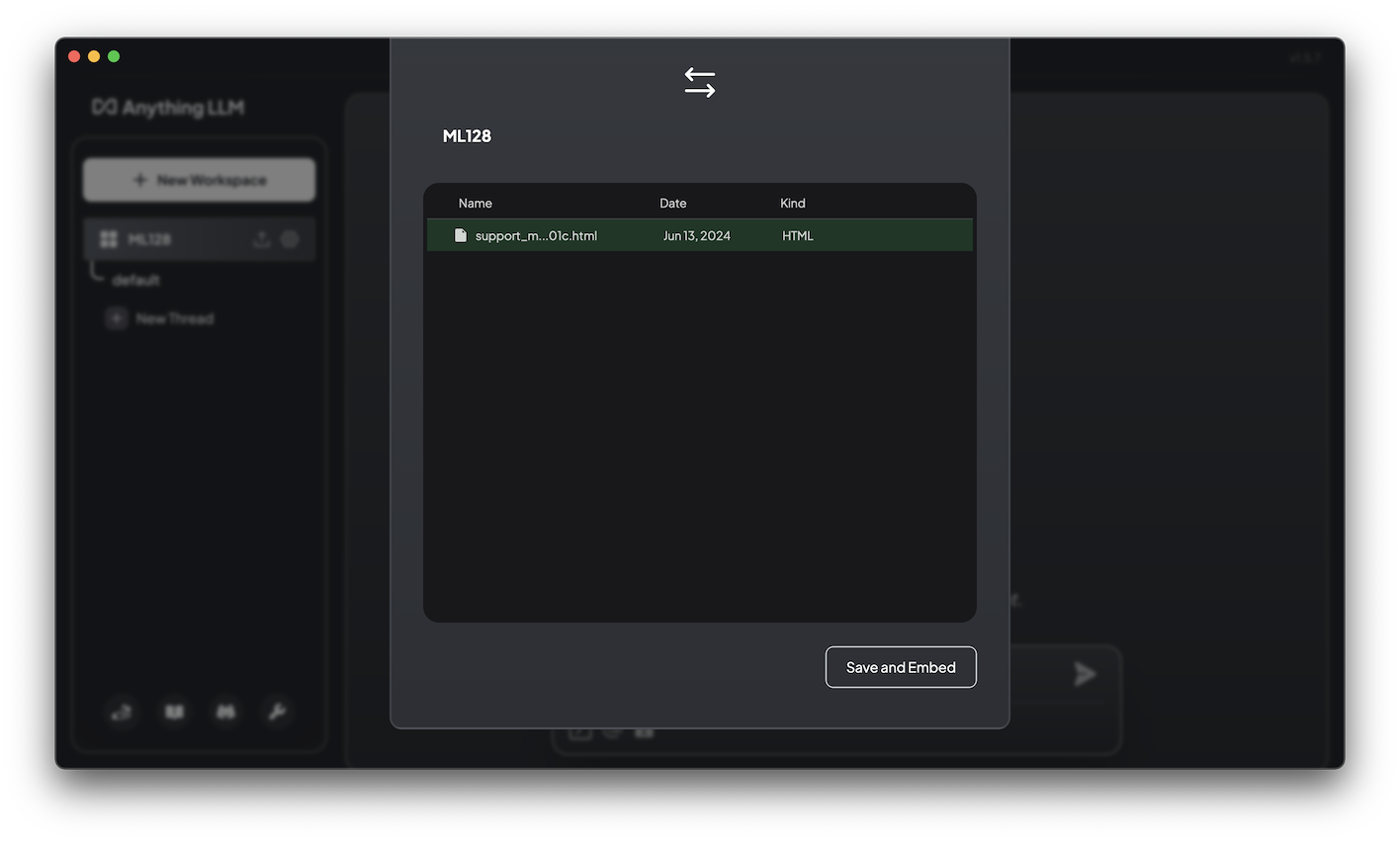

Click the "support..." document name.

At the bottom, click the "Save and Embed" button.

At the lower right, enter this prompt:

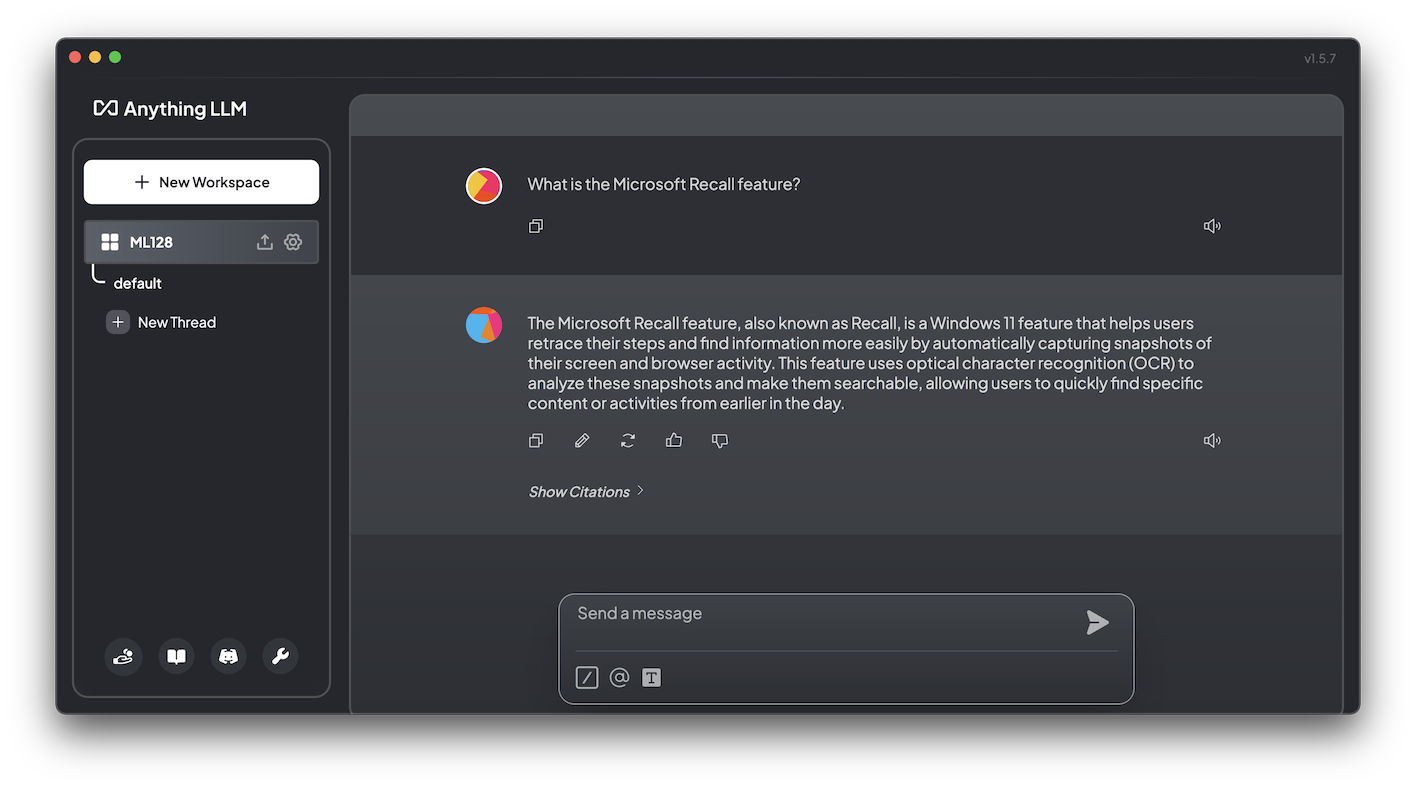

What is the Microsoft recall feature?

Click the right-arrow to the right of the message to process the prompt.

The answer is much better, as shown below.
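The improvement comes from retrieval: text from the embedded document is found and placed in the prompt ahead of the question. The toy sketch below shows the general idea with word-count vectors and cosine similarity. It is not how AnythingLLM is implemented, which relies on an embedding model and a vector database, and the sample chunks are made-up placeholder text.

```python
import math
import re
from collections import Counter


def word_counts(text: str) -> Counter:
    """Turn text into a bag-of-words vector (a stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_relevant_chunk(question: str, chunks: list[str]) -> str:
    """Pick the chunk whose word counts are most similar to the question's."""
    q = word_counts(question)
    return max(chunks, key=lambda c: cosine_similarity(q, word_counts(c)))


def build_augmented_prompt(question: str, chunks: list[str]) -> str:
    """Place the best-matching chunk ahead of the question."""
    context = most_relevant_chunk(question, chunks)
    return f"Context:\n{context}\n\nQuestion: {question}"


# Hypothetical placeholder chunks standing in for pieces of an embedded document.
chunks = [
    "The cafeteria opens at 8 am and serves breakfast.",
    "The library closes at 9 pm on weekdays.",
    "Parking permits are sold at the front office.",
]
question = "When does the library close?"
print(build_augmented_prompt(question, chunks))
```

Because the model now sees the relevant text in its prompt, it no longer has to guess from its training data alone.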

ML 128.1: Sum of Sentiments (10 pts)

In the left pane, at the bottom, click the wrench icon.

In the left pane, in the INSTANCE SETTINGS list, click "Workplace Chat".

The flag is covered by a green rectangle in the image below.

Configure LM Studio for Apple Silicon: Run Local LLMs with faster time to completion.

Posted 6-13-24