Logstash is an open source tool for collecting, parsing, and storing logs for future use. Kibana is a web interface that can be used to search and view the logs that Logstash has indexed. Both tools work with Elasticsearch, which stores and indexes the logs.

Create a new virtual machine and install Ubuntu on it. The procedure is the same as the one you used to install Security Onion.

On your server, in a Terminal window, execute these commands:

sudo apt update

sudo apt install openssh-server -y

ifconfig
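On newer Ubuntu releases `ifconfig` may be missing, because the net-tools package is not always installed by default; the `ip` command from iproute2 shows the same addresses. The sketch below is a hypothetical helper, `first_ipv4`, that reads the one-line-per-address output of `ip -4 -o addr show` and prints the first IPv4 address that is not on the loopback interface. It assumes the usual `ip -o` layout, where the fourth field is the address in CIDR form.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first non-loopback IPv4 address from
# `ip -4 -o addr show` output read on stdin.
# Assumed line layout: "2: ens33    inet 172.16.1.192/24 brd ... scope global ens33 ..."
first_ipv4() {
  awk '$2 != "lo" && $3 == "inet" {
         split($4, parts, "/")   # drop the "/24" prefix length
         print parts[1]
         exit
       }'
}
```

For example, `ip -4 -o addr show | first_ipv4` prints your server's address, which you need for the SSH command below and for several configuration files later.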

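If you are using a Windows PC, you may not have an SSH client, so use PuTTY. Otherwise, execute this command, replacing the IP address with your server's address from the ifconfig output: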

ssh student@172.16.1.192

Now you can copy and paste commands into the SSH client, which will make the process much easier.

sudo apt install software-properties-common -y

Execute these commands:

sudo add-apt-repository -y ppa:webupd8team/java

sudo apt update

sudo apt -y install oracle-java8-installer

wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

echo "deb http://packages.elastic.co/elasticsearch/2.x/debian stable main" | sudo tee -a /etc/apt/sources.list.d/elasticsearch-2.x.list

sudo apt update

sudo apt -y install elasticsearch

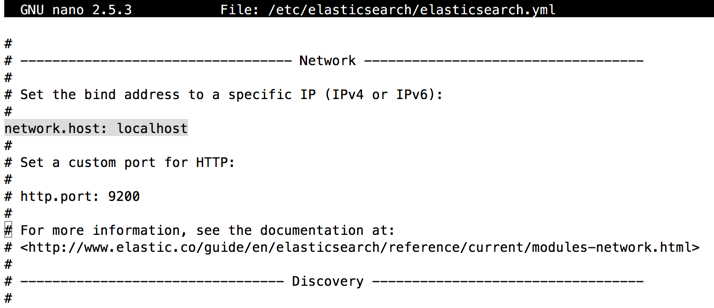

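sudo nano /etc/elasticsearch/elasticsearch.yml

Find the line that specifies network.host, remove the # to uncomment it, and change its value to localhost:

network.host: localhost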

Press Ctrl+X, Y, Enter to save the file.
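If you prefer not to hunt for the line in nano, the same change can be made non-interactively. The sketch below is a hypothetical helper, `set_config_key`, that uncomments and replaces a `key: value` line in a YAML-style settings file, or appends the line if the key is not present. It assumes the key appears at most once (commented or not) and that the value contains no `|` or `&` characters, which sed would treat specially.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "KEY: VALUE" in a simple YAML settings file.
# A commented-out line such as "# network.host: 192.168.0.1" is replaced;
# if no line for KEY exists, the setting is appended to the end of the file.
set_config_key() {
  local file="$1" key="$2" value="$3"
  if grep -qE "^#? *${key}:" "$file"; then
    # Replace the (possibly commented) line in place; GNU sed syntax.
    sed -i -E "s|^#? *${key}:.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}
```

Because the file belongs to root, one way to run it is to pass the function definition into a root shell: sudo bash -c "$(declare -f set_config_key); set_config_key /etc/elasticsearch/elasticsearch.yml network.host localhost"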

Execute these commands:

sudo systemctl restart elasticsearch

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch

sudo netstat -pant
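The `netstat -pant` output lists every TCP socket, which can be a lot to scan. The sketch below is a hypothetical filter, `listening_on`, that reads that output on stdin and keeps only the LISTEN lines whose local address ends in a given port, exiting with status 0 if it finds one. It assumes the standard netstat column order, where the fourth column is the local address and the sixth is the state. Elasticsearch's HTTP interface listens on port 9200 by default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `netstat -pant` output on stdin and print the
# LISTEN lines whose local address (column 4) ends in ":PORT".
# Exit status: 0 if at least one listener was found, 1 otherwise.
listening_on() {
  local port="$1"
  awk -v suffix=":${port}" '
    $6 == "LISTEN" && substr($4, length($4) - length(suffix) + 1) == suffix { print; found = 1 }
    END { exit !found }
  '
}
```

For example, sudo netstat -pant | listening_on 9200 && echo "Elasticsearch is listening". The same filter with port 5601 works for Kibana.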

echo "deb http://packages.elastic.co/kibana/4.5/debian stable main" | sudo tee -a /etc/apt/sources.list

sudo apt-get update

sudo apt install kibana

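sudo nano /opt/kibana/config/kibana.yml

Find the line that specifies server.host, remove the # to uncomment it, and change its value to "localhost":

server.host: "localhost"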

Press Ctrl+X, Y, Enter to save the file.

Execute these commands:

sudo systemctl daemon-reload

sudo systemctl enable kibana

sudo systemctl start kibana

sudo netstat -pant

sudo apt-get -y install nginx

sudo -v

echo "kibanaadmin:`openssl passwd -apr1`" | sudo tee -a /etc/nginx/htpasswd.users

When you are prompted, enter the password kibana twice.
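The `openssl passwd -apr1` command prints an Apache-style MD5 ("apr1") hash, a format Nginx's basic authentication accepts. If you'd rather not answer the interactive prompt, the sketch below is a hypothetical helper, `make_htpasswd_line`, that builds a complete `user:hash` line. A password typed on the command line can end up in your shell history, so this is only suitable for a lab machine.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one htpasswd line "USER:$apr1$salt$hash".
# openssl picks a random salt, so the hash differs on every run.
make_htpasswd_line() {
  local user="$1" password="$2" hash
  hash="$(openssl passwd -apr1 "$password")" || return 1
  printf '%s:%s\n' "$user" "$hash"
}
```

For example, make_htpasswd_line kibanaadmin kibana | sudo tee /etc/nginx/htpasswd.users writes the file without -a, so it ends up with exactly one kibanaadmin entry.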

sudo nano /etc/nginx/sites-available/default

Replace the contents of the file with this:

    server {
        listen 80;
        server_name 172.16.1.192;
        auth_basic "Restricted Access";
        auth_basic_user_file /etc/nginx/htpasswd.users;
        location / {
            proxy_pass http://localhost:5601;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection 'upgrade';
            proxy_set_header Host $host;
            proxy_cache_bypass $http_upgrade;
        }
    }

Press Ctrl+X, Y, Enter to save the file.

This configures Nginx to direct your server's HTTP traffic to the Kibana application, which is listening on localhost:5601. Nginx will also require basic authentication, using the htpasswd.users file that we created earlier.

Now check the config for syntax errors, and restart Nginx if none are found. Execute these commands:

sudo nginx -t

sudo systemctl restart nginx

echo "deb http://packages.elastic.co/logstash/2.3/debian stable main" | sudo tee -a /etc/apt/sources.list

sudo apt-get update

sudo apt-get install logstash

sudo mkdir -p /etc/pki/tls/certs

sudo mkdir /etc/pki/tls/private

sudo nano /etc/ssl/openssl.cnf

Find the [ v3_ca ] section, and add this line under it:

subjectAltName = IP: 172.16.1.192

Press Ctrl+X, Y, Enter to save the file.
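The subjectAltName line is what allows Filebeat to verify the certificate when it connects to Logstash by IP address, so it matters where it goes. The sketch below is a hypothetical helper, `add_san_to_v3_ca`, that inserts the line directly after the `[ v3_ca ]` section header with GNU sed. It assumes the header is written exactly as `[ v3_ca ]`, as in Ubuntu's stock openssl.cnf; try it on a copy of the file first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add "subjectAltName = IP: <ip>" right after the
# "[ v3_ca ]" header of an openssl.cnf-style file (GNU sed one-line "a" command).
add_san_to_v3_ca() {
  local file="$1" ip="$2"
  if ! grep -q '^\[ v3_ca ]' "$file"; then
    echo "no [ v3_ca ] section in $file" >&2
    return 1
  fi
  sed -i "/^\[ v3_ca ]/a subjectAltName = IP: ${ip}" "$file"
}
```

For example, after making a backup with sudo cp /etc/ssl/openssl.cnf /etc/ssl/openssl.cnf.bak, run sudo bash -c "$(declare -f add_san_to_v3_ca); add_san_to_v3_ca /etc/ssl/openssl.cnf 172.16.1.192"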

Now generate the SSL certificate and private key in the appropriate locations (/etc/pki/tls/...), with the following commands:

cd /etc/pki/tls

sudo openssl req -config /etc/ssl/openssl.cnf -x509 -days 3650 -batch -nodes -newkey rsa:2048 -keyout private/logstash-forwarder.key -out certs/logstash-forwarder.crt
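Before copying the certificate anywhere, it's worth confirming that the IP address actually made it into the certificate. The sketch below is a hypothetical helper, `cert_has_ip_san`, that prints the certificate with `openssl x509 -text` and checks its Subject Alternative Name entries for an exact IP match.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if CERT lists IP as an "IP Address:" entry
# in its X509v3 Subject Alternative Name extension.
cert_has_ip_san() {
  local cert="$1" ip="$2"
  openssl x509 -in "$cert" -noout -text \
    | grep -A1 'X509v3 Subject Alternative Name' \
    | tail -n 1 \
    | tr ',' '\n' \
    | sed 's/^ *//; s/ *$//' \
    | grep -qxF "IP Address:${ip}"
}
```

For example, cert_has_ip_san /etc/pki/tls/certs/logstash-forwarder.crt 172.16.1.192 && echo "SAN OK". If it fails, check where you put the subjectAltName line in openssl.cnf and generate the certificate again.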

Now configure Logstash. First, create the input file with this command:

sudo nano /etc/logstash/conf.d/02-beats-input.conf

Paste in this:

    input {
      beats {
        port => 5044
        ssl => true
        ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"
        ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"
      }
    }

Press Ctrl+X, Y, Enter to save the file.

This specifies a Beats input that listens on TCP port 5044 and uses the SSL certificate and private key that we created earlier.

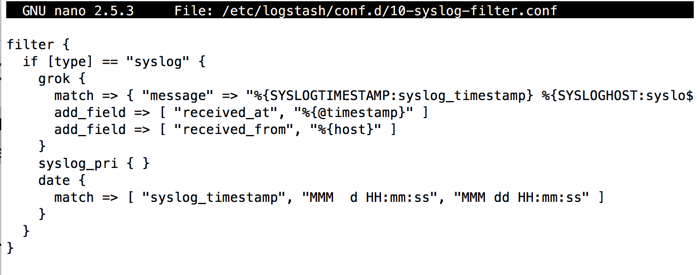

Create the filter file with this command:

sudo nano /etc/logstash/conf.d/10-syslog-filter.conf

Paste in this:

    filter {
      if [type] == "syslog" {
        grok {
          match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }
          add_field => [ "received_at", "%{@timestamp}" ]
          add_field => [ "received_from", "%{host}" ]
        }
        syslog_pri { }
        date {
          # Two spaces in "MMM  d": syslog pads single-digit days with a space
          match => [ "syslog_timestamp", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
        }
      }
    }

Press Ctrl+X, Y, Enter to save the file.

This filter looks for logs that are labeled as "syslog" type (by Filebeat) and uses grok to parse incoming syslog messages, making them structured and queryable.
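To see what the grok pattern pulls out of a syslog line, here is a rough Bash equivalent. It is only an illustration using Bash's `=~` regular-expression match, not how Logstash works internally, and the regex is a simplified, assumed stand-in for the grok patterns (for example, the program name is taken as everything up to the first `[` or `:`). The hypothetical function `parse_syslog_line` prints the same field names the filter uses.

```shell
#!/usr/bin/env bash
# Illustration only: a Bash regex that roughly mimics the grok pattern in
# 10-syslog-filter.conf. Logstash itself uses grok, not this code.
parse_syslog_line() {
  local line="$1"
  # Groups: 1 timestamp, 2 host, 3 program, 4 "[pid]" (optional), 5 pid, 6 message
  local re='^([A-Z][a-z]{2} +[0-9]{1,2} [0-9]{2}:[0-9]{2}:[0-9]{2}) ([^ ]+) ([^:[]+)(\[([0-9]+)\])?: (.*)$'
  if [[ $line =~ $re ]]; then
    printf 'syslog_timestamp=%s\n' "${BASH_REMATCH[1]}"
    printf 'syslog_hostname=%s\n' "${BASH_REMATCH[2]}"
    printf 'syslog_program=%s\n' "${BASH_REMATCH[3]}"
    printf 'syslog_pid=%s\n' "${BASH_REMATCH[5]}"
    printf 'syslog_message=%s\n' "${BASH_REMATCH[6]}"
  else
    echo "no match" >&2
    return 1
  fi
}
```

For example, parse_syslog_line 'Dec 11 05:00:01 elk sshd[1234]: Accepted password for student' reports sshd as the program and 1234 as the PID. Lines that don't fit the pattern are the ones grok would tag with _grokparsefailure.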

Create the output file with this command:

sudo nano /etc/logstash/conf.d/30-elasticsearch-output.conf

Paste in this:

    output {
      elasticsearch {
        hosts => ["localhost:9200"]
        sniffing => true
        manage_template => false
        index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
        document_type => "%{[@metadata][type]}"
      }
    }

Press Ctrl+X, Y, Enter to save the file.
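The index setting creates one index per Beat per day, using the event's @timestamp, which Logstash keeps in UTC, so Filebeat events land in indices named like filebeat-2017.12.11. The sketch below is a hypothetical helper, `daily_index_name`, that computes the expected index name for a given date with GNU `date`, which can help when you look for a particular day's data.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the daily index name "<beat>-YYYY.MM.dd" that the
# output's index pattern would produce for a date (default: today, UTC).
# Requires GNU date for the -d option.
daily_index_name() {
  local beat="$1" day="${2:-now}"
  printf '%s-%s\n' "$beat" "$(date -u -d "$day" +%Y.%m.%d)"
}
```

For example, daily_index_name filebeat prints the name of today's Filebeat index.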

Execute this command to test your configuration files:

sudo /opt/logstash/bin/logstash --configtest -f /etc/logstash/conf.d/

If there are errors, check these three files for mistakes:

/etc/logstash/conf.d/02-beats-input.conf

/etc/logstash/conf.d/10-syslog-filter.conf

/etc/logstash/conf.d/30-elasticsearch-output.conf

Execute these commands:

sudo systemctl restart logstash

sudo /lib/systemd/systemd-sysv-install enable logstash

cd ~

sudo apt install curl

curl -L -O https://download.elastic.co/beats/dashboards/beats-dashboards-1.2.2.zip

sudo apt-get -y install unzip

unzip beats-dashboards-*.zip

cd beats-dashboards-*

./load.sh

cd ~

curl -O https://gist.githubusercontent.com/thisismitch/3429023e8438cc25b86c/raw/d8c479e2a1adcea8b1fe86570e42abab0f10f364/filebeat-index-template.json

curl -XPUT 'http://localhost:9200/_template/filebeat?pretty' -d@filebeat-index-template.json

The server is now ready. Next we need to configure clients to send log files to the server.

Note for Other Servers

These commands (or equivalent commands) have already been executed on your ELK server. To gather data from other Ubuntu servers, you'd need to execute these commands now; run the scp command on the ELK server, and the rest on the client:

scp /etc/pki/tls/certs/logstash-forwarder.crt user@client_server_private_address:/tmp

sudo mkdir -p /etc/pki/tls/certs

sudo cp /tmp/logstash-forwarder.crt /etc/pki/tls/certs/

echo "deb https://packages.elastic.co/beats/apt stable main" | sudo tee -a /etc/apt/sources.list.d/beats.list

wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

sudo apt-get update

On your ELK server, execute these commands:

wget https://download.elastic.co/beats/filebeat/filebeat_1.3.1_amd64.deb

sudo dpkg -i filebeat_1.3.1_amd64.deb

sudo nano /etc/filebeat/filebeat.yml

We'll modify the existing prospector to send syslog and auth.log to Logstash. After the paths: line, add these two lines:

- /var/log/auth.log

- /var/log/syslog

Scroll down about 4 screens to find the line that specifies document_type:

Uncomment that line and change its value to "syslog", as shown below.

document_type: syslog

This specifies that the logs in this prospector are of type syslog (which is the type that our Logstash filter is looking for).

Now scroll several more pages down to the Elasticsearch as output section, as highlighted in the image below.

Scroll down several pages to find the end of this section, as highlighted in the image below.

You need to delete the entire Elasticsearch as output section, which is too large to see in a single screen.

To do that, scroll back up to the top of the Elasticsearch as output section and place the cursor on the first line, which contains elasticsearch:.

Then press Ctrl+K to delete that line.

Your screen should look like the image below:

Press Ctrl+K many more times, until the entire Elasticsearch as output section is gone, as shown below.

Don't delete the "### Logstash as output" line.

Below the "### Logstash as output" line, find the #logstash: line and delete the # to uncomment it, as shown below.

Two lines later, find the line that says:

#hosts: ["localhost:5044"]

Uncomment it and change it to this, using your ELK server's IP address:

hosts: ["172.16.1.192:5044"]

This configures Filebeat to connect to Logstash on your ELK Server at port 5044 (the port that we specified a Logstash input for earlier).

Directly under the hosts entry, and with the same indentation, add this line, as shown below:

bulk_max_size: 1024

Scroll down a page or so to find the #tls: section, as shown below. By default this entire section is commented out.

Uncomment the #tls: line, so your screen looks like this:

Then uncomment the line that starts with #certificate_authorities: and change it to this, as shown below:

certificate_authorities: ["/etc/pki/tls/certs/logstash-forwarder.crt"]

This configures Filebeat to use the SSL certificate that we created on the ELK Server.

Press Ctrl+X, Y, Enter to save the file.
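Editing filebeat.yml screen by screen is error-prone. As an alternative, the sketch below is a hypothetical helper, `write_filebeat_config`, that writes a minimal configuration containing only the settings changed above: the two log paths, document_type: syslog, the Logstash host with bulk_max_size: 1024, and the TLS certificate authority. It assumes the Filebeat 1.x layout used in this project (prospectors under filebeat:, and tls: under output: logstash:) and drops everything else from the stock file, including its /var/log/*.log path, so keep a backup of the original.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Filebeat 1.x configuration to FILE that
# ships auth.log and syslog to Logstash at HOST:5044 over TLS.
# Assumption: Filebeat 1.x key names (prospectors, document_type, tls).
write_filebeat_config() {
  local file="$1" host="$2"
  cat > "$file" <<EOF
filebeat:
  prospectors:
    -
      paths:
        - /var/log/auth.log
        - /var/log/syslog
      input_type: log
      document_type: syslog

output:
  logstash:
    hosts: ["${host}:5044"]
    bulk_max_size: 1024
    tls:
      certificate_authorities: ["/etc/pki/tls/certs/logstash-forwarder.crt"]
EOF
}
```

One way to use it:

sudo cp /etc/filebeat/filebeat.yml /etc/filebeat/filebeat.yml.orig

write_filebeat_config /tmp/filebeat.yml 172.16.1.192

sudo cp /tmp/filebeat.yml /etc/filebeat/filebeat.yml

sudo filebeat -c /etc/filebeat/filebeat.yml -configtest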

Now execute these commands to restart Filebeat, enable it at boot, and test its configuration:

sudo systemctl restart filebeat

sudo systemctl enable filebeat

sudo filebeat -c /etc/filebeat/filebeat.yml -configtest

Execute this command to see the Filebeat index:

curl -XGET 'http://localhost:9200/filebeat-*/_search?pretty'

If you get zero hits, something is wrong with your Filebeat configuration file.
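The _search output is long. If you only want to know whether any documents arrived, Elasticsearch's _count API returns a small JSON object such as {"count":42,"_shards":{...}}. The sketch below is a hypothetical helper, `es_count_from_json`, that pulls the number out of that response with sed, so no JSON tool is needed. It assumes the compact (non-pretty) response on a single line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read an Elasticsearch _count response on stdin and
# print the value of its "count" field (prints nothing if the field is absent).
es_count_from_json() {
  sed -n 's/.*"count" *: *\([0-9][0-9]*\).*/\1/p'
}
```

For example, curl -s 'http://localhost:9200/filebeat-*/_count' | es_count_from_json prints the number of Filebeat documents; 0 or no output means nothing has been indexed yet.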

Troubleshooting

If you want to see Filebeat messages, edit the /etc/filebeat/filebeat.yml file and change the level to warning, as shown below.

Then restart Filebeat and view the syslog with this command, as shown below.

tail /var/log/syslog
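Plain tail shows only the last ten lines of the whole syslog, which may not include any Filebeat messages. The sketch below is a hypothetical helper, `filebeat_log_tail`, that keeps only lines mentioning filebeat (case-insensitively) before taking the last few, assuming Filebeat's messages go to /var/log/syslog as described above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the last COUNT lines of LOGFILE that mention
# filebeat (case-insensitive). Defaults: /var/log/syslog and 20 lines.
filebeat_log_tail() {
  local logfile="${1:-/var/log/syslog}" count="${2:-20}"
  grep -i 'filebeat' "$logfile" | tail -n "$count"
}
```

For example, running filebeat_log_tail with no arguments shows the 20 most recent Filebeat lines from /var/log/syslog.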

In a web browser, go to http://172.16.1.192 (your ELK server's IP address). A box pops up, asking for credentials. Use these, as shown below.

User name: kibanaadmin

Password: kibana

You see a page prompting you to configure a default index pattern, as shown below.

Troubleshooting

If that password doesn't work, execute these commands:

Enter this password twice when you are prompted: kibana

On the left side, click the entry beginning with [filebeat, as shown below.

Troubleshooting

If you get a "500 Internal Server Error" page, edit the /etc/nginx/htpasswd.users file and make sure it contains only one entry for "kibanaadmin".

On the next page, at the center top, click the green asterisk.

Troubleshooting

If there is no entry on the left side, click the "Use event times to create index names [DEPRECATED]" button and type in this pattern: [filebeat-]YYYY.MM.DD

Save it with the button at the bottom of the page.

At the top left, click Discover.

A histogram appears with green bars, showing the log entries for the last 15 minutes, as shown below.

Capture a whole-desktop image and save it as "Proj 1x from YOURNAME".

YOU MUST SEND IN A WHOLE-DESKTOP IMAGE FOR FULL CREDIT

Send a Cc to yourself.

Last Modified: 10-10-17 9:42 pm

Revised with "index pattern" troubleshooting 11-21-17

Revised with more troubleshooting and tests 12-11-17 5 am

Revised for installing unauthenticated packages 12-12-17