The added code looks like this:

<div style="position:absolute;top:-13201px;">rolex explorer,rolex,u boat,rado,zenith,<a href="http://www.doshopsells.net/rolex-watches-4.html">fake rolex for sale</a>,franck muller,rolex masterpiece,emporio armani,rolex milgauss,cartier,rolex yachtmaster,<a href="http://www.doshopsells.net/">replica watches</a>,a lange sohne,roger dubuis,chopard,breitling</div>

That has a chance of fixing the problem, but it leaves these issues unresolved:

A hasty action such as a simple password change is likely to alert the attacker that they have been detected without actually keeping them out. That may make things worse: the attacker may switch to a less detectable attack.

echo 1 >/proc/sys/net/ipv4/ip_forward

iptables -t nat -A PREROUTING -p tcp --dport 80 -j DNAT --to-destination 1.2.3.4

iptables -t nat -A PREROUTING -p tcp --dport 20:21 -j DNAT --to-destination 1.2.3.4

iptables -t nat -A PREROUTING -p tcp --dport 1025:65535 -j DNAT --to-destination 1.2.3.4

iptables -t nat -A POSTROUTING -p tcp -d 1.2.3.4 --dport 80 -j MASQUERADE

iptables -t nat -A POSTROUTING -p tcp -d 1.2.3.4 --dport 20:21 -j MASQUERADE

iptables -t nat -A POSTROUTING -p tcp -d 1.2.3.4 --dport 1025:65535 -j MASQUERADE
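The six rules above follow one pattern: for each port range, a DNAT rule in PREROUTING and a matching MASQUERADE rule in POSTROUTING. As a minimal sketch in Python 3, the helper below only builds the command strings so they can be reviewed before being run by hand; it does not execute anything. The name `redirect_rules` is hypothetical and is not part of iptables.

```python
def redirect_rules(dest_ip, port_ranges):
    """Build iptables commands that forward the given TCP port ranges to dest_ip."""
    # One DNAT rule per port range: rewrite the destination of incoming packets.
    dnat = [
        "iptables -t nat -A PREROUTING -p tcp --dport %s "
        "-j DNAT --to-destination %s" % (ports, dest_ip)
        for ports in port_ranges
    ]
    # One MASQUERADE rule per port range: rewrite the source of forwarded packets.
    masq = [
        "iptables -t nat -A POSTROUTING -p tcp -d %s --dport %s "
        "-j MASQUERADE" % (dest_ip, ports)
        for ports in port_ranges
    ]
    return dnat + masq


if __name__ == "__main__":
    # Same destination and port ranges as the commands above.
    for rule in redirect_rules("1.2.3.4", ["80", "20:21", "1025:65535"]):
        print(rule)
```

Printing the rules instead of running them keeps the step reviewable, which matters on a machine that is already under attack.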

Edit /etc/hosts and add this line:

2.2.2.2 www.example.com

ipconfig /flushdns

sudo killall -HUP mDNSResponder

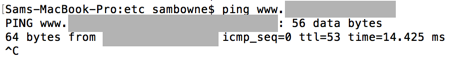

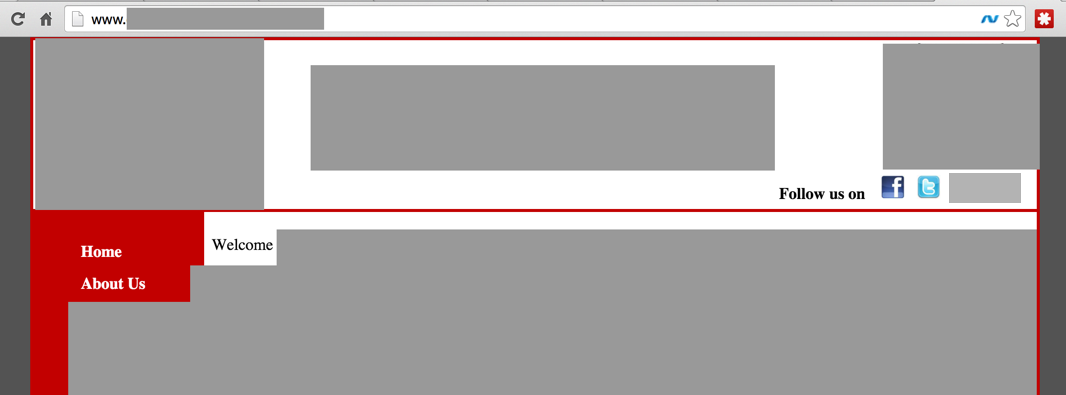

ping www.example.com
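Alongside the ping test, it can help to confirm that the hosts file really contains the override. The sketch below, in Python 3, parses hosts-file text and returns the first address mapped to a name. `hosts_lookup` is a hypothetical helper; it reads only the hosts file, so it says nothing about what a DNS cache may still hold.

```python
def hosts_lookup(hosts_text, hostname):
    """Return the first IP address mapped to hostname in hosts-file text, or None."""
    wanted = hostname.lower()
    for line in hosts_text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        ip, names = fields[0], fields[1:]
        if wanted in (name.lower() for name in names):
            return ip
    return None


if __name__ == "__main__":
    # Path assumes a Linux or Mac system.
    with open("/etc/hosts") as f:
        print(hosts_lookup(f.read(), "www.example.com"))
```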

tcpdump -pn -C 100 -W 100 -w capture &

#!/usr/bin/python

import os, urllib2, hashlib, time

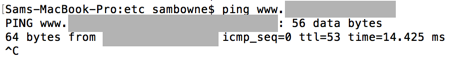

pages = ["http://www.example.com/index.html", "http://www.example.com/aboutus.html", "http://www.example.com/registration.html", "http://www.example.com/schedule.html", "http://www.example.com/past.html", "http://www.example.com/tournaments.html", "http://www.example.com/rules.html", "http://www.example.com/gymdir.html"]

summary = ''

for page in pages:
    # Fetch each page and hash its raw HTML
    response = urllib2.urlopen(page)
    html = response.read()
    h = hashlib.new('md5', html).hexdigest()
    print time.strftime("%c") + '\t' + page + '\t' + h
    summary += h

# Hash the concatenated page hashes so one value covers the whole site
print "Summary: ", time.strftime("%c"), hashlib.new('md5', summary).hexdigest()
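The script above targets Python 2 (`urllib2` and the `print` statement). For readers on Python 3, a rough equivalent might look like the sketch below. The function names and the `fetch` parameter are my own assumptions, added so the hashing logic can be exercised without network access; they are not part of the original script.

```python
import hashlib
import time
import urllib.request


def fetch_url(url):
    """Download a page and return its body as bytes."""
    with urllib.request.urlopen(url) as response:
        return response.read()


def page_digest(body):
    """MD5 hex digest of one page body (bytes)."""
    return hashlib.md5(body).hexdigest()


def summary_digest(digests):
    """MD5 of the concatenated per-page digests, mirroring the script above."""
    return hashlib.md5("".join(digests).encode("ascii")).hexdigest()


def check(pages, fetch=fetch_url):
    """Print one line per page plus a summary line; return the summary digest."""
    digests = []
    for page in pages:
        h = page_digest(fetch(page))
        print(time.strftime("%c") + "\t" + page + "\t" + h)
        digests.append(h)
    summary = summary_digest(digests)
    print("Summary: ", time.strftime("%c"), summary)
    return summary


if __name__ == "__main__":
    check(["http://www.example.com/index.html"])
```

MD5 is kept only to match the original output; it is used here for change detection, not as protection against a deliberate collision.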

I executed this command to set up the cron job:

crontab -e

*/1 * * * * /root/tripwire.py >> /root/triplog

Wed Dec 2 16:15:01 2015 http://www.example.com/index.html 5ad98230901d54238308e97f989400f7

Wed Dec 2 16:15:01 2015 http://www.example.com/aboutus.html 366c4556a8225d7a86a08941af21fcf8

Wed Dec 2 16:15:01 2015 http://www.example.com/registration.html 8c996f780191a18382ee073e0591085c

Wed Dec 2 16:15:02 2015 http://www.example.com/schedule.html a15136f01ed132e7edc502d6d48c947a

Wed Dec 2 16:15:02 2015 http://www.example.com/past.html 648e465998e07d738d93c43e9f83460f

Wed Dec 2 16:15:02 2015 http://www.example.com/tournaments.html 189e706a2d31430d003ce8ef8537a403

Wed Dec 2 16:15:02 2015 http://www.example.com/rules.html 26e7a3d8a549804ca80e5956290cd044

Wed Dec 2 16:15:02 2015 http://www.example.com/gymdir.html 588e0e7f5275086ff3e72329e68ef097

Summary: Wed Dec 2 16:15:02 2015 46f002f88670ddf053608076e66dac70

Wed Dec 2 16:16:01 2015 http://www.example.com/index.html 5ad98230901d54238308e97f989400f7

Wed Dec 2 16:16:01 2015 http://www.example.com/aboutus.html 366c4556a8225d7a86a08941af21fcf8

Wed Dec 2 16:16:02 2015 http://www.example.com/registration.html 8c996f780191a18382ee073e0591085c

Wed Dec 2 16:16:02 2015 http://www.example.com/schedule.html a15136f01ed132e7edc502d6d48c947a

Wed Dec 2 16:16:02 2015 http://www.example.com/past.html 648e465998e07d738d93c43e9f83460f

Wed Dec 2 16:16:02 2015 http://www.example.com/tournaments.html 189e706a2d31430d003ce8ef8537a403

Wed Dec 2 16:16:02 2015 http://www.example.com/rules.html 26e7a3d8a549804ca80e5956290cd044

Wed Dec 2 16:16:02 2015 http://www.example.com/gymdir.html 588e0e7f5275086ff3e72329e68ef097

Summary: Wed Dec 2 16:16:02 2015 46f002f88670ddf053608b76e66dac70
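With one run per minute the log grows quickly, so comparing summary lines by eye gets tedious. As a hedged sketch in Python 3, the helper below scans log lines in the format shown above and reports every run whose summary hash differs from the previous run. The name `summary_changes` is hypothetical, and the `/root/triplog` path is taken from the cron entry.

```python
def summary_changes(log_lines):
    """Return (timestamp, old_hash, new_hash) for each run whose summary hash changed."""
    changes = []
    previous = None
    for line in log_lines:
        if not line.startswith("Summary:"):
            continue  # skip the per-page lines
        fields = line.split()
        digest = fields[-1]                  # hash is the last field
        timestamp = " ".join(fields[1:-1])   # everything between label and hash
        if previous is not None and digest != previous:
            changes.append((timestamp, previous, digest))
        previous = digest
    return changes


if __name__ == "__main__":
    with open("/root/triplog") as f:
        for when, old, new in summary_changes(f):
            print("Changed at %s: %s -> %s" % (when, old, new))
```

A reported change only says that at least one page differs; the per-page lines for that run show which one.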

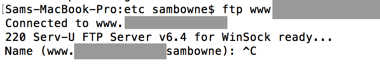

How to redirect traffic to another machine in Linux

Last modified: 12-2-15 2 pm